Quarkus: The Next Wave of Kubernetes-Native Java Frameworks

During my recent conversations with CIOs, the most common concern has been how to reduce cloud resource consumption, and therefore cost, when running applications in containers and on Kubernetes. Spring Boot has long been the de facto Java framework for cloud-native development, but the performance benefits offered by a Kubernetes-native Java framework are hard to ignore.

This article gives an overview of Quarkus, the problems it solves, and the reasons it is gaining popularity among organisations today.

Java Bloatedness Problem

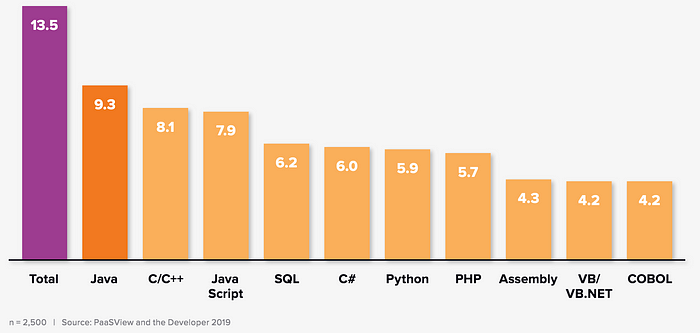

Java continues to be one of the world’s most popular programming languages among software developers, with 9.3 million Java developers worldwide.

Java earned this popularity through its ability to run on multiple platforms without any changes to the application source code. This “Write Once, Run Anywhere” (WORA) capability gave Java applications platform independence and portability, which served organisations well until containers arrived on the scene a few years ago.

Containers have taken the JVM’s “Write Once, Run Anywhere” paradigm and extended it to most other programming languages. Applications written in any language can now use containers and Kubernetes for operational efficiency and decouple themselves from the runtime infrastructure. Containers are lightweight, start quickly, scale easily and, most importantly, let an application run on any kind of infrastructure. In other words, containers now deliver the portability that the JVM used to provide, which makes the JVM a less compelling fit for modern workloads. Running a JVM inside a container also adds a layer that brings operational inefficiencies to container-based Java applications, and the JVM’s large memory requirements and lengthy start-up times make matters worse.

This is why Java, a mainstream technology for many years, now finds itself in a spot of bother, and why it is getting harder for Java-based applications to compete in the container and Kubernetes world.

In a nutshell, during the era in which Java thrived, applications were not built for cloud, event-driven or serverless architectures. The industry therefore needs a way to run Java-based applications in this new world of microservices, containers, Kubernetes and serverless.

Quarkus Introduction

Quarkus is a Kubernetes-native Java framework built for both Java virtual machines (JVMs) and native compilation with GraalVM. It follows a container-first philosophy, which makes it well suited to cloud, Kubernetes and serverless environments.

The goal of Quarkus is to optimise Java for running in containers. Quarkus applications can run in two modes:

a) Native compilation: Quarkus uses GraalVM Native Image to compile Java applications into native executables, resulting in fast boot times and reduced RSS (resident set size) memory. GraalVM is a high-performance JDK distribution whose ahead-of-time compilation can significantly improve application start-up and memory efficiency, which is ideal for microservices and serverless applications.

b) Java Virtual Machine: Quarkus can also run in traditional JVM mode, where it still delivers significant performance gains.

The following figure from quarkus.io compares Quarkus native, Quarkus on the JVM, and a traditional cloud-native Java framework. Start-up memory was measured as resident set size (RSS) rather than heap size. RSS is the portion of a process’s memory that is held in RAM, as opposed to the portion that has been swapped out. The results show a significant difference in both RSS memory and boot time between Quarkus native and the traditional cloud-native stack.
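
To make the difference between heap size and RSS concrete, here is a minimal sketch in plain Java. It is not part of Quarkus or of the quarkus.io benchmark; the class name `MemoryFootprint` and its methods are my own illustration. It assumes a Linux host, where the kernel reports RSS as the `VmRSS` entry in `/proc/self/status`; on other platforms it simply reports that RSS is unavailable.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.OptionalLong;

// Illustrative only: contrasts JVM heap usage with process RSS on Linux.
public class MemoryFootprint {

    /** Heap currently in use by this JVM, in kilobytes. */
    public static long usedHeapKb() {
        Runtime rt = Runtime.getRuntime();
        return (rt.totalMemory() - rt.freeMemory()) / 1024;
    }

    /** Extracts the VmRSS value (kB) from /proc/<pid>/status-style lines. */
    public static OptionalLong parseVmRssKb(List<String> statusLines) {
        for (String line : statusLines) {
            if (line.startsWith("VmRSS:")) {
                // Typical line: "VmRSS:     123456 kB"
                String[] parts = line.trim().split("\\s+");
                return OptionalLong.of(Long.parseLong(parts[1]));
            }
        }
        return OptionalLong.empty();
    }

    /** RSS of the current process; empty where /proc is not available. */
    public static OptionalLong residentSetKb() {
        Path status = Path.of("/proc/self/status");
        if (!Files.isReadable(status)) {
            return OptionalLong.empty();
        }
        try {
            return parseVmRssKb(Files.readAllLines(status));
        } catch (IOException e) {
            return OptionalLong.empty();
        }
    }

    public static void main(String[] args) {
        System.out.println("Used heap (kB): " + usedHeapKb());
        OptionalLong rss = residentSetKb();
        System.out.println(rss.isPresent()
                ? "Resident set size (kB): " + rss.getAsLong()
                : "Resident set size: not available on this platform");
    }
}
```

On a JVM the RSS figure is usually noticeably larger than the used heap, because it also covers metaspace, thread stacks, JIT-compiled code and the JVM itself. That is why RSS is the more honest number when sizing containers.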

Similarly, running Quarkus in native mode significantly reduces RSS memory compared with running it on the JVM. Quarkus gives you both options: scale up in JVM mode if you need a single instance with a larger heap, or scale out in native mode if you need more, lighter-weight instances. Which mode to choose depends on the needs of the application. The performance shown in the figure above is for illustration and can help an organisation make the right decision in its own business context; there is no one-size-fits-all answer.

How does Quarkus work?

The figure below depicts the activities which are performed when a traditional Java cloud native framework starts.

When a traditional cloud-native Java framework is used, some activities happen at build time and others at runtime. The build-time work is mainly application packaging, usually done with build tools such as Maven or Gradle. Everything else, such as loading configuration files, scanning the classpath for annotated classes and reading their annotations, parsing XML descriptors and starting thread pools, is done at runtime when the application starts. Because most of the work happens at runtime rather than at build time, applications built on traditional cloud-native Java frameworks take longer to start.

The Quarkus way to optimise start-up time is to perform most of these activities at build time rather than at runtime. Loading configuration files, scanning the classpath, and reading and setting properties all happen during the build. The metadata is therefore processed only once, at build time. When the application starts, that metadata is already in place, which minimises the need for dynamic scanning and class loading at runtime and significantly improves start-up time. This is how Quarkus works behind the scenes, and it is the reason for its “supersonic, subatomic” nature.
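
The contrast between the two approaches can be sketched with a toy model in plain Java. This is not how Quarkus is implemented internally; the `@Service` annotation, the three sample classes and the hard-coded index are all hypothetical stand-ins. The first method repeats a reflective scan on every start, while the second only reads a result that was recorded earlier, which is the idea behind moving work to build time.

```java
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.util.ArrayList;
import java.util.List;

// Toy model only: a hypothetical annotation and classes, not Quarkus internals.
public class BuildTimeIndexSketch {

    @Retention(RetentionPolicy.RUNTIME)
    @interface Service {}

    @Service static class OrderService {}
    @Service static class PaymentService {}
    static class PlainHelper {}

    // Stand-in for "every class on the classpath".
    static final List<Class<?>> CLASSPATH =
            List.of(OrderService.class, PaymentService.class, PlainHelper.class);

    /** Traditional approach: reflectively inspect every class on each startup. */
    public static List<String> scanAtStartup() {
        List<String> found = new ArrayList<>();
        for (Class<?> candidate : CLASSPATH) {
            if (candidate.isAnnotationPresent(Service.class)) {
                found.add(candidate.getSimpleName());
            }
        }
        return found;
    }

    // Build-time approach: imagine the scan above ran once during the build
    // and its result was written into the application as a constant.
    static final List<String> PRECOMPUTED_INDEX =
            List.of("OrderService", "PaymentService");

    /** Startup now only reads the recorded result; no scanning is needed. */
    public static List<String> loadAtStartup() {
        return PRECOMPUTED_INDEX;
    }

    public static void main(String[] args) {
        System.out.println("Scanned at startup:  " + scanAtStartup());
        System.out.println("Loaded from index:   " + loadAtStartup());
    }
}
```

Both methods return the same list of services. The difference is when the work is done: with three classes the scan is trivial, but on a real classpath with thousands of classes, skipping it at every start is where the time is saved.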

Quarkus Business Value

The business value which Quarkus brings to an organisation falls under three main categories.

a) Cost Savings: With both Quarkus native compilation and Quarkus on the JVM, an organisation gets low memory utilisation and fast application start-up times. This translates into a higher deployment density of Kubernetes pods on the same hardware, and therefore into significant cost savings.

b) Faster Time to Market: Quarkus improves developer productivity through its live coding feature, which significantly reduces the time spent building, deploying and troubleshooting code changes during development. This enables an organisation to deliver solutions to the market faster and keep its edge over competitors.

c) Reliability: Quarkus is built on the proven and trusted enterprise Java ecosystem and has an active user community. Red Hat’s sponsorship of Quarkus is also a decisive factor for organisations choosing it over competing technologies.

Why does it matter?

- An enterprise Kubernetes platform such as Red Hat OpenShift becomes more cost-effective as the number of applications that can be hosted on a cluster increases.

- Organisations using Quarkus can do more with the same amount of resources. This means applications using Quarkus can be deployed on fewer nodes than applications that don’t use Quarkus, and the reduction in the number of nodes means that organisations can leverage fewer infrastructure resources to accomplish the same goals, thereby resulting in significant cost savings.

- Traditional cloud-native development stacks, based on the JVM, have large memory footprints and slow start-up times. Quarkus optimises resource consumption with respect to memory and subsequently enables cost savings related to memory consumption.

- Because both Quarkus JVM and Quarkus native increase deployment density and optimise memory utilisation, cloud-hosting costs can be reduced as well.
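
The link between memory per pod and the number of nodes is simple arithmetic, sketched below in plain Java. All figures in `main` (a 16 GB node, 200 pods, 512 MB versus 128 MB per pod) are hypothetical values chosen for illustration, not Quarkus measurements. The sketch also considers memory only and ignores CPU, system overhead and scheduling constraints.

```java
// Illustrative arithmetic only: the numbers below are made up, not benchmarks.
public class DeploymentDensity {

    /** Whole pods that fit into a node's allocatable memory. */
    public static long podsPerNode(long nodeMemoryMb, long podMemoryMb) {
        if (podMemoryMb <= 0) {
            throw new IllegalArgumentException("podMemoryMb must be positive");
        }
        return nodeMemoryMb / podMemoryMb;
    }

    /** Nodes needed to host the given number of pods, rounded up. */
    public static long nodesNeeded(long pods, long nodeMemoryMb, long podMemoryMb) {
        long perNode = podsPerNode(nodeMemoryMb, podMemoryMb);
        if (perNode == 0) {
            throw new IllegalArgumentException("a single pod does not fit on the node");
        }
        return (pods + perNode - 1) / perNode; // ceiling division
    }

    public static void main(String[] args) {
        long nodeMemoryMb = 16_384; // hypothetical node size
        long pods = 200;            // hypothetical workload

        long heavyPodMb = 512;      // hypothetical traditional footprint
        long lightPodMb = 128;      // hypothetical lighter footprint

        System.out.println("Nodes at 512 MB per pod: "
                + nodesNeeded(pods, nodeMemoryMb, heavyPodMb));
        System.out.println("Nodes at 128 MB per pod: "
                + nodesNeeded(pods, nodeMemoryMb, lightPodMb));
    }
}
```

With these assumed inputs the same 200 pods need 7 nodes at 512 MB each but only 2 nodes at 128 MB each. Plugging in your own measured RSS figures gives a rough first estimate of the saving for a real workload.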

Conclusion

The popularity of Java and the adoption of containers suggest the need to optimise the deployment of Java in container-native and Kubernetes native environments. Quarkus optimises Java for containers by increasing the deployment density of containers due to reduced memory utilisation. Additionally, Quarkus decreases the start-up time for container-based applications, improves application throughput, and enhances developer productivity by means of its unification of imperative and reactive programming.

The bottom line is that while Java applications built on traditional cloud-native frameworks have struggled in the cloud, Quarkus is keeping Java relevant there.

In my view, the Quarkus wave has already begun and is picking up fast. Many organisations have already migrated to Quarkus in their production environments. In the days to come, Quarkus may well become the new de facto Java framework for cloud-native development.